There are several ways to get external traffic into a Kubernetes cluster. In this article we will take a look at NodePort.

By default, Kubernetes microservices sit on an internal flat network that is not accessible from outside the cluster. NodePort is the most basic way to publish a containerized application to the outside world. You can create the Service using the K8s CLI:

    kubectl expose deployment hello-livefire --type=NodePort --name=livefire-nodeport-svc

Alternatively, you can use a YAML manifest like the one below.

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: livefire
      name: livefire-nodeport-svc
    spec:
      externalTrafficPolicy: Cluster
      ports:
      - nodePort: 31155
        port: 80
        protocol: TCP
        targetPort: 80
      selector:
        app: livefire
      type: NodePort

To expose the Service, Kubernetes allocates a specific TCP or UDP port (a nodePort) on every Node in the cluster. The port number is chosen from the range specified at cluster initialization with the --service-node-port-range flag (default: 30000-32767). If you want to use a specific port number, you can set it in the nodePort field of the YAML manifest.
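To make the range rule concrete, here is a minimal Python sketch that checks whether a requested nodePort falls inside a given range. The helper names `parse_port_range` and `is_valid_node_port` are hypothetical, and the sketch assumes the simple `low-high` form of the flag value shown above; it only models the rule and does not talk to a cluster.

```python
def parse_port_range(spec: str) -> range:
    """Parse a 'low-high' string such as '30000-32767' (both ends inclusive)."""
    low_text, high_text = spec.split("-", 1)
    low, high = int(low_text), int(high_text)
    if not (0 < low <= high <= 65535):
        raise ValueError(f"invalid port range: {spec!r}")
    return range(low, high + 1)


def is_valid_node_port(port: int, spec: str = "30000-32767") -> bool:
    """Return True if the port lies inside the configured nodePort range."""
    return port in parse_port_range(spec)


default_range = parse_port_range("30000-32767")
print(len(default_range))         # how many nodePorts the default range offers
print(is_valid_node_port(31155))  # the nodePort used in the manifest
print(is_valid_node_port(8080))   # a port outside the default range
```

With the default range this gives 2768 usable ports, which is also the upper bound on the number of Service ports that can be published this way.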

Then the Service will be accessible on all of the Node’s interfaces, using the interface IP along with the nodePort value.

By default, the NodePort is exposed on all active Node interfaces. To be more precise about how the Service gets exposed, you can restrict it to specific Node IPs by passing one or more IP ranges (CIDR blocks) to the --nodeport-addresses flag of K8s “kube-proxy”.
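The effect of such a restriction can be sketched in a few lines of Python. This is only a model, assuming the flag is given CIDR blocks and that an empty list means "all local addresses"; the function name `nodeport_listen_addresses` and the sample IPs are made up for illustration.

```python
import ipaddress


def nodeport_listen_addresses(node_ips, nodeport_cidrs):
    """Return the node IPs that fall inside any of the given CIDR blocks.

    An empty CIDR list models the default behaviour: every address is eligible.
    """
    if not nodeport_cidrs:
        return list(node_ips)
    networks = [ipaddress.ip_network(cidr) for cidr in nodeport_cidrs]
    return [
        ip for ip in node_ips
        if any(ipaddress.ip_address(ip) in net for net in networks)
    ]


# Hypothetical addresses configured on one Node.
node_ips = ["192.168.10.11", "10.0.0.5", "172.16.0.1"]

print(nodeport_listen_addresses(node_ips, []))                   # default: all of them
print(nodeport_listen_addresses(node_ips, ["192.168.10.0/24"]))  # only the matching one
```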

For production-grade deployments, a statically configured external load balancer is normally deployed. It is then manually configured to send traffic to the Nodes’ IP addresses and the TCP/UDP port assigned to the Service (nodePort).
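Conceptually, the static load balancer configuration is just a list of `NodeIP:nodePort` targets. The Python sketch below builds such a list and cycles through it round-robin; the Node IPs and the `build_backends` helper are hypothetical, and a real load balancer would of course use its own configuration format and balancing algorithm.

```python
from itertools import cycle


def build_backends(node_ips, node_port):
    """Pair every Node IP with the nodePort assigned to the Service."""
    return [f"{ip}:{node_port}" for ip in node_ips]


# Hypothetical Node IPs; 31155 is the nodePort from the manifest.
backends = build_backends(["192.168.10.11", "192.168.10.12", "192.168.10.13"], 31155)

# Simple round-robin over the static backend list.
picker = cycle(backends)
first_four = [next(picker) for _ in range(4)]
print(first_four)
```

Note that the list has to be maintained by hand: when Nodes are added to or removed from the cluster, the backend pool must be updated accordingly.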

Let’s now take a closer look at how exactly NodePort is realised

In my previous post (NSX-T and Kubernetes Services – east-west load-balancing with ClusterIP), we examined the Node’s “kube-proxy” process, which is responsible for the K8s Service implementation. In the case of NodePort, “kube-proxy” programs Linux IPtables rules, which steer the traffic through a series of NAT chains: PREROUTING, KUBE-SERVICES, KUBE-NODEPORTS and KUBE-SVC-MR3UEIXJ6N3EVTP6 (see the output below).

$ iptables -t nat -L -n -v

Chain PREROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

874 160K KUBE-SERVICES all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes service portals */

...

Chain KUBE-SERVICES (2 references)

pkts bytes target prot opt in out source destination

7 514 KUBE-NODEPORTS all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes service nodeports; NOTE: this must be the last rule in this chain */ ADDRTYPE match dst-type LOCAL

...

Chain KUBE-NODEPORTS (1 references)

pkts bytes target prot opt in out source destination

4 208 KUBE-SVC-MR3UEIXJ6N3EVTP6 tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/livefire-nodeport-svc: */ tcp dpt:31155

...

Chain KUBE-SVC-MR3UEIXJ6N3EVTP6 (2 references)

pkts bytes target prot opt in out source destination

1 52 KUBE-SEP-PFYEZUCWPNUF64MI all -- * * 0.0.0.0/0 0.0.0.0/0 /* default/livefire-nodeport-svc: */ statistic mode random probability 0.50000000000

3 156 KUBE-SEP-DXVSSYFQD2YCNSGJ all -- * * 0.0.0.0/0 0.0.0.0/0 /* default/livefire-nodeport-svc: */

...

Chain KUBE-SEP-DXVSSYFQD2YCNSGJ (1 references)

pkts bytes target prot opt in out source destination

3 156 DNAT tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/livefire-nodeport-svc: */ tcp to:10.244.2.3:80

...

Chain KUBE-SEP-PFYEZUCWPNUF64MI (1 references)

pkts bytes target prot opt in out source destination

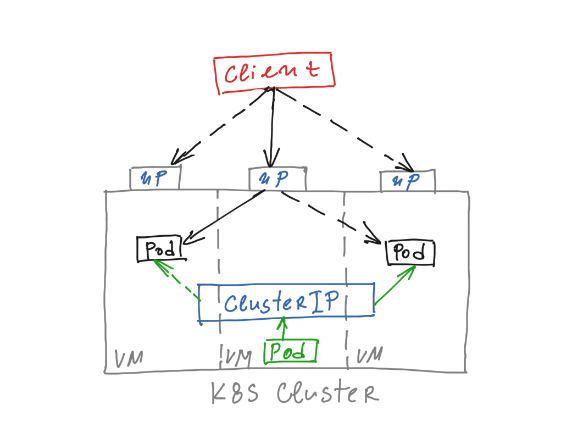

1 52 DNAT tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/livefire-nodeport-svc: */ tcp to:10.244.1.3:80IPtables on the Node will randomly select a destination target pods (KUBE-SVC-MR3UEIXJ6N3EVTP6) and then will perform DNAT to the POD IP and target service port (KUBE-SEP-DXVSSYFQD2YCNSGJ or KUBE-SEP-PFYEZUCWPNUF64MI). In case the selected POD is on the same Node traffic is sent directly. If the POD is on another Node traffic will be send, based on the routing information from the preconfigured Kubernetes networking solution (NSX-T, flannel, static routes).
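The 0.5 probability on the first KUBE-SEP rule, followed by an unconditional second rule, is what makes the selection even across the two Pods. The Python sketch below generalises this to n endpoints under the assumption that rule i (counting from 0) matches with probability 1/(n-i) and the last rule always matches; that is my understanding of how these chains are generated, not something the output above proves. The function names are hypothetical.

```python
from fractions import Fraction


def rule_probabilities(n):
    """Per-rule match probability for a chain of n endpoint rules.

    Assumption: rule i (0-based) matches with probability 1/(n - i),
    so the final rule is an unconditional catch-all.
    """
    return [Fraction(1, n - i) for i in range(n)]


def effective_probabilities(rule_probs):
    """Probability that a packet ends up at each endpoint.

    A rule is only evaluated if all earlier rules did not match.
    """
    remaining = Fraction(1)  # probability mass that reached this rule
    result = []
    for p in rule_probs:
        result.append(remaining * p)
        remaining *= 1 - p
    return result


for n in (2, 3, 4):
    per_rule = rule_probabilities(n)
    print(n, [str(p) for p in per_rule], [str(p) for p in effective_probabilities(per_rule)])
```

For two endpoints the per-rule values are 1/2 and 1, matching the chain shown above, and each Pod ends up with half of the new connections.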

A NodePort Service also gets a ClusterIP (10.107.120.59), which is not used for traffic coming from outside the Kubernetes cluster (north-south).

    $ kubectl get svc
    NAME                    TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    ...
    livefire-nodeport-svc   NodePort   10.107.120.59   <none>        80:31155/TCP   10d

However, it is used for traffic initiated within the cluster (east-west), which happens when a Node or a Pod wants to talk to the Service.

    $ iptables -t nat -L -n -v
    ...
    Chain KUBE-SERVICES (2 references)
    pkts bytes target prot opt in out source destination
    ...
    1 52 KUBE-SVC-MR3UEIXJ6N3EVTP6 tcp -- * * 0.0.0.0/0 10.107.120.59 /* default/livefire-nodeport-svc: cluster IP */ tcp dpt:80

NSX-T container networking and NodePort

For traffic initiated outside of the cluster, NodePort behaves no differently when NSX-T provides container networking. DNAT is handled entirely in the Node’s TCP/IP stack, by IPtables.

The difference comes with east-west traffic initiated from a Pod (or a Node) to the ClusterIP of the NodePort Service: this traffic is handled by Open vSwitch flows instead of Linux IPtables rules (see NSX-T and Kubernetes Services – east-west load-balancing with ClusterIP).

A big NSX-T advantage is that the distributed firewall (DFW) can be leveraged to increase the security of the NodePort published service.

Depending on the NSX-T and NCP versions in use, check the release notes for caveats: Issue 2263536 – Kubernetes service of type NodePort fails to forward traffic.

Summary

Although NodePort is a quick and easy way to expose a microservice outside of the Kubernetes cluster, in my opinion it is more suitable for test/sandbox deployments, where lower cost matters more than service availability and manageability.

Some NodePort drawbacks to consider for production environments are:

- Scale is limited by the size of the “nodePort” range, because each port can be assigned to only one Service.

- By default, the port range is limited to 30000–32767. Using high ports can be problematic from a security perspective.

- By design, NodePort bypasses almost all network security in Kubernetes.

- If an external load balancer is in use, the service “Health Check” targets the Node IP rather than the Pod IP directly, which is a poor monitoring solution.

- Connectivity issues are more complex to troubleshoot.

In my next blog post we will look at a more intelligent way of routing traffic into Kubernetes, using the LoadBalancer type.