This is the first blog in a series that tries to unravel the mystery of Kubernetes Services and how they are implemented when NSX-T is used as the container networking solution.

First things first: what is a Kubernetes Service?

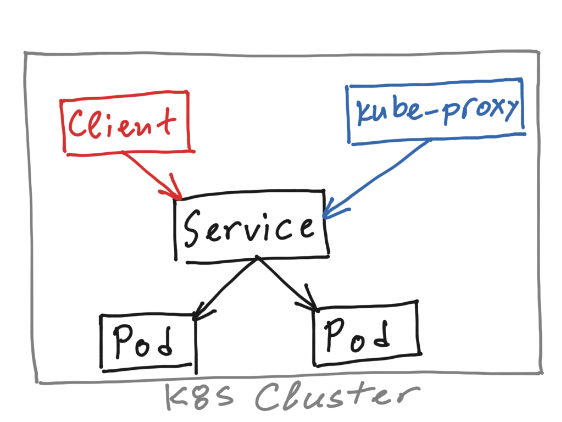

A K8s Service connects applications with other applications and with users. It binds pods that provide the same service into a single logical construct and then exposes it to the Kubernetes cluster and/or the outside world. The pods are grouped into an Endpoints list based on a selector (a label) that they carry as an attribute. The Service is also discoverable through a DNS name in the Kubernetes dynamic DNS system (CoreDNS) or through injected environment variables.
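To make the selector and naming ideas concrete, here is a minimal Python sketch. It is not Kubernetes code: the `Pod` class, the pod names and the non-matching pod are made up for illustration, and the DNS helper assumes the default `cluster.local` cluster domain.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)


def select_endpoints(pods, selector, target_port):
    """Return 'ip:port' for every pod whose labels contain all selector pairs."""
    return [
        f"{pod.ip}:{target_port}"
        for pod in pods
        if all(pod.labels.get(k) == v for k, v in selector.items())
    ]


def dns_name(service, namespace, cluster_domain="cluster.local"):
    """Service DNS name, assuming the default 'cluster.local' domain."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def env_var_prefix(service):
    """Prefix of the injected variables, e.g. <PREFIX>_SERVICE_HOST."""
    return service.upper().replace("-", "_")


# Hypothetical pods; only the first two carry the label the Service selects on.
pods = [
    Pod("web-a", "10.244.1.4", {"app": "livefire-demo"}),
    Pod("web-b", "10.244.2.2", {"app": "livefire-demo"}),
    Pod("db-a", "10.244.2.9", {"app": "other"}),
]

endpoints = select_endpoints(pods, {"app": "livefire-demo"}, 80)
print(endpoints)
print(dns_name("livefire-svc", "livefire"))
print(env_var_prefix("livefire-svc") + "_SERVICE_HOST")
```

The pod labelled `app=other` is left out of the list, which is all that "selection" means here.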

There are several Service types. In this blog we will discuss the basic one: ClusterIP.

This is the default Kubernetes Service type. It provides a service inside the cluster that other applications (pods) in the same cluster can access. This type of Service is not accessible from outside the cluster. It provides a distributed east-west load-balancing system that lives on all of the K8s Nodes.

How is it natively implemented?

Every K8s Node runs a “kube-proxy” process, which is responsible for implementing Services. The “kube-proxy” registers a “watch” for the addition, modification and removal of Service and Endpoints objects. When a Service is created or an existing one is modified, the API Server notifies “kube-proxy”. The “kube-proxy” then programs iptables (or IPVS, via netlink) rules on the Node to capture traffic to the Service’s ClusterIP address and port and redirect it to one of the Service’s backend Pods. For each endpoint in the Endpoints object there is a separate iptables chain, which selects that backend Pod. By default, the choice of backend is random.

Below is the CLI output for a K8s ClusterIP Service. The name of the Service is livefire-svc, with an IP address of 10.107.211.73 and port 80 (http). TargetPort is the port on which the container (application) accepts traffic; in our case it is the same, port 80 (http). There are three pods in the Endpoints list (10.244.1.4, 10.244.2.2, 10.244.2.3), which have been selected based on the Selector app=livefire-demo.
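The watch-and-program loop can be pictured with a small Python sketch. This is a simplified model, not kube-proxy’s implementation: the event dictionary layout is hypothetical (only the event type names ADDED, MODIFIED and DELETED come from the Kubernetes watch API), and a plain dictionary stands in for the iptables rules.

```python
def apply_event(table, event):
    """Update the forwarding table for one watch event.

    table maps (cluster_ip, port) -> list of 'pod_ip:port' backends.
    """
    key = (event["cluster_ip"], event["port"])
    if event["type"] in ("ADDED", "MODIFIED"):
        # A new or changed Service: (re)write its backend list.
        table[key] = list(event["backends"])
    elif event["type"] == "DELETED":
        # Service removed: stop capturing traffic for it.
        table.pop(key, None)
    return table


table = {}
events = [
    {"type": "ADDED", "cluster_ip": "10.107.211.73", "port": 80,
     "backends": ["10.244.1.4:80", "10.244.2.2:80"]},
    {"type": "MODIFIED", "cluster_ip": "10.107.211.73", "port": 80,
     "backends": ["10.244.1.4:80", "10.244.2.2:80", "10.244.2.3:80"]},
]
for event in events:
    apply_event(table, event)

print(table)
```

Each Node keeps its own copy of this state, which is why the load balancing is distributed rather than sitting on one box.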

$ kubectl describe svc livefire-svc

Name: livefire-svc

Namespace: livefire

Labels: app=livefire-demo

Annotations: <none>

Selector: app=livefire-demo

Type: ClusterIP

IP: 10.107.211.73

Port: 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.4:80,10.244.2.2:80,10.244.2.3:80

If we check the iptables ruleset on one of the Nodes, we will see three kinds of chains related to the Service (only one of the three KUBE-SEP chains is shown here):

$ iptables -t nat -L

Chain KUBE-SERVICES (2 references)

target prot opt source destination

...

KUBE-SVC-PUXF6NQGEM6L36VE tcp -- anywhere 10.107.211.73 /* livefire/livefire-demo-rc: cluster IP */ tcp dpt:http

Chain KUBE-SVC-PUXF6NQGEM6L36VE (1 references)

target prot opt source destination

KUBE-SEP-IE63CKU5OUEDJOFZ all -- anywhere anywhere /* livefire/livefire-demo-rc: */ statistic mode random probability 0.33332999982

KUBE-SEP-G5KIHW44JZP547LH all -- anywhere anywhere /* livefire/livefire-demo-rc: */ statistic mode random probability 0.50000000000

KUBE-SEP-RULIYA7EFF24T6SQ all -- anywhere anywhere /* livefire/livefire-demo-rc: */

Chain KUBE-SEP-RULIYA7EFF24T6SQ (1 references)

target prot opt source destination

DNAT tcp -- anywhere anywhere /* livefire/livefire-demo-rc: */ tcp to:10.244.2.3:80

Traffic destined to the Service IP and port (10.107.211.73:80) will match the rule in the KUBE-SERVICES chain and will be sent to the service-specific load-balancing chain KUBE-SVC-PUXF6NQGEM6L36VE. A random target chain will be selected (for instance KUBE-SEP-RULIYA7EFF24T6SQ), in which the traffic will be DNAT’ed to the pod IP address (10.244.2.3) on the original port 80.

Now that we know how the native ClusterIP Service is implemented, let’s see how this is done when NSX-T is used as a container networking solution.
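The probabilities in the KUBE-SVC chain (roughly 0.333, then 0.5, then no probability at all) can look uneven at first. The rules are tried in order, so each probability applies only to the traffic that the earlier rules did not take. The short Python sketch below works this out with exact fractions; the function names are my own, and the last rule, which always matches, is modelled as probability 1.

```python
from fractions import Fraction


def rule_probabilities(n):
    """Per-rule match probability for n backends: 1/n, 1/(n-1), ..., 1."""
    return [Fraction(1, n - i) for i in range(n)]


def overall_probabilities(rule_probs):
    """Chance that traffic ends at each rule when rules are tried in order."""
    remaining = Fraction(1)  # share of traffic not yet taken by an earlier rule
    result = []
    for p in rule_probs:
        result.append(remaining * p)
        remaining *= 1 - p
    return result


per_rule = rule_probabilities(3)
overall = overall_probabilities(per_rule)
print(per_rule)
print(overall)
```

For three backends the per-rule values are 1/3, 1/2 and 1, and the overall share of each backend comes out as exactly 1/3.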

The NSX-T Kubernetes integration solution leverages Open vSwitch (OVS) to provide network plumbing for the pods. OVS traffic bypasses the Node TCP/IP stack, which means that the native Kubernetes “kube-proxy” and the Linux iptables rules on the Nodes can’t be used to provide connectivity. Because of that, the integration introduces a new “NSX kube-proxy” agent, which is delivered as a container. It is part of the NSX Node Agent pod, which runs on each K8s Node. The task of the “NSX kube-proxy” is to “watch” for Services (creation/modification) and to program the corresponding OVS flows.

From the Kubernetes perspective the Service looks the same:

$ kubectl describe svc livefire-nsx-svc

Name: livefire-nsx-svc

Namespace: livefire

Labels: app=livefire-demo

Annotations: <none>

Selector: app=livefire-demo

Type: ClusterIP

IP: 10.108.213.27

Port: 80/TCP

TargetPort: 80/TCP

Endpoints: 10.4.2.2:80,10.4.2.3:80,10.4.2.4:80

Checking the OVS flow table, we can see a flow whose destination is the Service IP and port (10.108.213.27:80) and whose action is “group:5”.

$ sudo ovs-ofctl dump-flows br-int

...

cookie=0x7, duration=170.052s, table=1, n_packets=0, n_bytes=0, priority=100,ct_state=+new+trk,tcp,nw_dst=10.108.213.27,tp_dst=80 actions=group:5

Group tables enable OpenFlow to process forwarding decisions on multiple links (load-balancing, multicast and active/standby). If we dump the group table, we can verify the load-balancing backend objects (the pods in the Service Endpoints list).
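As a rough illustration of what a “select” group holds, the Python sketch below parses the group_id=5 entry from the dump-groups output that follows and picks one bucket for a flow. The parsing is plain text processing; the `pick_bucket` function is only an assumption about the general idea (hash the flow, map it onto the bucket weights), since the actual selection method is up to the OVS implementation. The source address in the flow key is made up.

```python
import re
import zlib

GROUP = (
    "group_id=5,type=select,"
    "bucket=weight:100,actions=ct(commit,table=2,zone=65312,nat(dst=10.4.2.3:80)),"
    "bucket=weight:100,actions=ct(commit,table=2,zone=65312,nat(dst=10.4.2.2:80)),"
    "bucket=weight:100,actions=ct(commit,table=2,zone=65312,nat(dst=10.4.2.4:80))"
)

BUCKET_RE = re.compile(
    r"bucket=weight:(\d+),actions=ct\(.*?nat\(dst=([\d.]+):(\d+)\)\)"
)


def parse_buckets(group_line):
    """Extract (weight, 'ip:port') for every bucket in a dump-groups line."""
    return [(int(w), f"{ip}:{port}") for w, ip, port in BUCKET_RE.findall(group_line)]


def pick_bucket(buckets, flow_key):
    """Map a flow onto the cumulative bucket weights (illustrative, not OVS's hash)."""
    total = sum(weight for weight, _ in buckets)
    point = zlib.crc32(flow_key.encode()) % total
    for weight, backend in buckets:
        if point < weight:
            return backend
        point -= weight


buckets = parse_buckets(GROUP)
print(buckets)
# Hypothetical client pod 10.4.1.5 opening a connection to the Service.
print(pick_bucket(buckets, "10.4.1.5:34567->10.108.213.27:80"))
```

Because the flow in table 1 only matches new connections (ct_state=+new+trk) and each bucket commits the NAT decision to conntrack, the choice is made once per connection rather than once per packet.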

$ sudo ovs-ofctl -O OpenFlow13 dump-groups br-int

...

group_id=5,type=select,bucket=weight:100,actions=ct(commit,table=2,zone=65312,nat(dst=10.4.2.3:80)),bucket=weight:100,actions=ct(commit,table=2,zone=65312,nat(dst=10.4.2.2:80)),bucket=weight:100,actions=ct(commit,table=2,zone=65312,nat(dst=10.4.2.4:80))

As I mentioned above, the ClusterIP Service is accessible only within the Kubernetes cluster. In my next blog we shall discuss the options for exposing containerised applications outside of the cluster.
